
Shadow AI is no longer a fringe phenomenon. A Microsoft survey found that 78% of AI users bring their own tools to the workplace, and data shows that nearly 60% of users rely on unmanaged AI apps. The risks are real – and so are the consequences: according to IBM’s 2025 Cost of a Data Breach report, one in five organizations has already suffered a breach tied to shadow AI.

It’s the same pattern that was seen with Shadow IT in the early days of cloud. Only this time, the tools are smarter, adoption rates are faster, and there’s still a lot we don’t know about the actual risks of AI in production environments. What we do know is that it’s pervasive: XM Cyber’s early research across over a hundred organizations spanning industries from finance and healthcare to manufacturing and government found that over 80% showed signs of Shadow AI activity.

In this blog, we’ll drill down into what Shadow AI is, why traditional security tools are blind to it, and how organizations can start closing the gaps before exposures turn into incidents.

What Is Shadow AI?

Shadow AI is the use of artificial intelligence tools inside an organization without approval or oversight from IT or security teams.

In practice, Shadow AI usually takes the form of browser-based AI services like Gemini, ChatGPT, Claude, or Copilot. It also includes AI-enabled applications installed on endpoints and Model Context Protocol (MCP) servers that developers use to connect AI into their workflows.

Shadow AI is related to Shadow IT, the unapproved devices, applications, or cloud services that expand the attack surface and circumvent governance, but its risks run deeper. These tools can absorb sensitive data – including proprietary code, customer PII, financial models, and even credentials stored in MCP configuration files – and leave no trace in logs or audit trails. Because AI services can be adopted without formal deployment, approval, or even involvement by IT or security, their use can spread quickly across an organization. And even when Shadow AI use stems from a lack of awareness rather than malicious intent, the risk is just as real.

Our customers have made it clear they want to get ahead of this risk. They asked us to help them uncover AI activity in their environments, including the tools they did not know were there. In response, we are developing Shadow AI detection capabilities in our exposure management platform as part of our strategy for protecting organizations from the risks of AI. With this addition, we will cover the widest range of attack surfaces: on-prem, cloud, Kubernetes, OT, and now Shadow AI. Our early research and customer telemetry surfaced four critical insights about Shadow AI:

- Shadow AI Is Nearly Ubiquitous

XM Cyber research found that more than 80% of the organizations studied showed signs of Shadow AI activity. The detected activity spanned every corner of the business. Sales teams entered sensitive customer data into ChatGPT, HR uploaded resumes into Claude, and executives used AI for strategic planning. The broader market shows the same trajectory. As mentioned above, Microsoft reports that 78% of AI users bring their own tools into the workplace, and IBM found that 20% of organizations experienced breaches tied to Shadow AI, with each incident adding an average of $670,000 to breach costs.

- Your Security Stack Is Flying AI Blind

XM Cyber research found that traditional security tools missed the majority of Shadow AI activity. Encrypted browser traffic concealed AI interactions from network DLP and CASB tools, and browser logging proved ineffective on unmanaged devices. Endpoint browser history revealed usage that legacy tools did not detect. Based on this testing, XM Cyber concluded that 70–80% of Shadow AI traffic evades traditional monitoring. Other research backs this up, finding that encrypted channels hide AI data flows and create blind spots that allow Shadow AI to operate unseen by conventional security controls.
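To make the endpoint angle concrete, here is a minimal sketch of how visited URLs (for example, exported from browser history) could be matched against known AI services. It is an illustration only, not a description of how XM Cyber's detection works. The domain list is a small, non-exhaustive assumption, and the function names are hypothetical.

```python
from collections import Counter
from typing import Iterable, Optional
from urllib.parse import urlparse

# Illustrative, non-exhaustive mapping of hostnames to AI services (an assumption).
AI_SERVICE_DOMAINS = {
    "chatgpt.com": "ChatGPT",
    "claude.ai": "Claude",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
}


def match_ai_service(url: str) -> Optional[str]:
    """Return the AI service a URL belongs to, or None if it matches nothing."""
    host = (urlparse(url).hostname or "").lower()
    for domain, service in AI_SERVICE_DOMAINS.items():
        # Match the domain itself or any subdomain of it.
        if host == domain or host.endswith("." + domain):
            return service
    return None


def summarize_ai_visits(urls: Iterable[str]) -> Counter:
    """Count visits per AI service across a list of visited URLs."""
    counts: Counter = Counter()
    for url in urls:
        service = match_ai_service(url)
        if service is not None:
            counts[service] += 1
    return counts


if __name__ == "__main__":
    history = [
        "https://chatgpt.com/c/123",
        "https://claude.ai/new",
        "https://example.com/pricing",
    ]
    print(summarize_ai_visits(history))
```

Matching on hostnames alone says nothing about what data was shared, so a result like this is best read as a signal that a service is in use, not as evidence of a leak.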

- Developer Environments Are the New Wild West

XM Cyber identified credential exposure in AI-assisted development workflows. Model Context Protocol (MCP) servers frequently stored API keys and tokens in configuration files. When these files were exposed or synced to shared repositories, they created direct paths for data exfiltration and lateral movement. The OWASP Top 10 for LLM Applications highlights the same issues, warning that poor configuration practices often lead to sensitive information disclosure, including leaked credentials.
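As an illustration of the pattern described above, the sketch below scans an MCP client configuration for environment variables that look like inline credentials. It assumes the JSON layout used by several MCP clients, with a top-level `mcpServers` object whose entries carry an `env` map; other clients may differ. The name pattern, the `${` placeholder convention, and the function name are all assumptions for the example.

```python
import json
import re
from typing import List, Tuple

# Variable names that suggest a credential (a heuristic, not a complete list).
SECRET_NAME_PATTERN = re.compile(r"(key|token|secret|password)", re.IGNORECASE)


def find_plaintext_secrets(config_text: str) -> List[Tuple[str, str]]:
    """Return (server_name, variable_name) pairs that look like inline secrets."""
    config = json.loads(config_text)
    findings = []
    for server_name, server in config.get("mcpServers", {}).items():
        for var_name, value in server.get("env", {}).items():
            if not SECRET_NAME_PATTERN.search(var_name):
                continue
            # Assumption: values starting with "${" are placeholders resolved
            # elsewhere, so only literal non-empty strings are flagged.
            if isinstance(value, str) and value and not value.startswith("${"):
                findings.append((server_name, var_name))
    return findings


if __name__ == "__main__":
    sample = json.dumps({
        "mcpServers": {
            "github": {
                "command": "npx",
                "env": {"GITHUB_TOKEN": "ghp_example", "LOG_LEVEL": "debug"},
            }
        }
    })
    for server, variable in find_plaintext_secrets(sample):
        print(f"{server}: {variable} is stored inline")
```

The sketch reports only the variable name, never the value, so its own output does not become another place where the secret is exposed.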

- Compliance Is a Paper Tiger Against Shadow AI

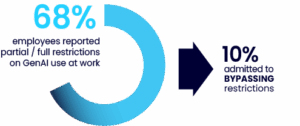

XM Cyber telemetry showed Shadow AI activity in highly regulated sectors – healthcare, finance, and consulting – where compliance frameworks were firmly in place. Despite this, employees still uploaded sensitive data into unmanaged AI services. Recent enterprise survey data confirms this trend: 68% of employees reported partial or full restrictions on GenAI use at work, yet nearly 10% admitted to bypassing those restrictions to continue using external tools.

Where the Research Goes Next

Our early telemetry provided a first view of Shadow AI in the enterprise. The next phase is to refine how we measure it. Several parameters stand out as especially important moving forward and should be monitored closely:

- Adoption rate – How quickly new AI tools move from a single user to an entire department or tenant.

- Role-based exposure – Which functions see the most unmanaged use, from developers and executives to sales and HR.

- Secrets in code – How often MCP servers and development workflows expose API keys, tokens, and other credentials.

- Tool sprawl – The number of distinct AI services in use per endpoint and per organization.

- Missed activity – The share of Shadow AI that slips past traditional controls like DLP, CASB, and SWG.

These parameters will shape how organizations understand the real impact of Shadow AI and learn where to focus their defenses.
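Two of the parameters above, tool sprawl and missed activity, reduce to simple arithmetic once AI usage events are collected. The sketch below shows one possible way to compute them. The `AIEvent` record and its fields are hypothetical and stand in for whatever telemetry an organization actually has.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class AIEvent:
    """One observed use of an AI service (hypothetical record shape)."""
    endpoint: str
    service: str
    seen_by_controls: bool  # True if DLP, CASB, or SWG also logged the event


def tool_sprawl(events: List[AIEvent]) -> Dict[str, int]:
    """Number of distinct AI services observed per endpoint."""
    services = defaultdict(set)
    for event in events:
        services[event.endpoint].add(event.service)
    return {endpoint: len(names) for endpoint, names in services.items()}


def missed_activity_share(events: List[AIEvent]) -> float:
    """Fraction of events that traditional controls did not log."""
    if not events:
        return 0.0
    missed = sum(1 for event in events if not event.seen_by_controls)
    return missed / len(events)


if __name__ == "__main__":
    events = [
        AIEvent("laptop-1", "ChatGPT", False),
        AIEvent("laptop-1", "Claude", False),
        AIEvent("laptop-1", "ChatGPT", True),
        AIEvent("laptop-2", "Gemini", False),
    ]
    print(tool_sprawl(events))
    print(missed_activity_share(events))
```

Counting per event is the simplest choice. Weighting by data volume or by user would be just as defensible, depending on what the measurement is meant to inform.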

A Strategy for Managing AI Exposures

AI has become part of everyday business activity and is a business enabler across industries. Organizations need to be familiar with the risks of employees using unmanaged tools across functions, encrypted channels hiding the traffic from inspection, and developer environments leaking credentials that attackers can exploit. By continuously monitoring AI usage as part of overall enterprise exposure, organizations can track its spread and build defenses that stay connected to real business priorities.

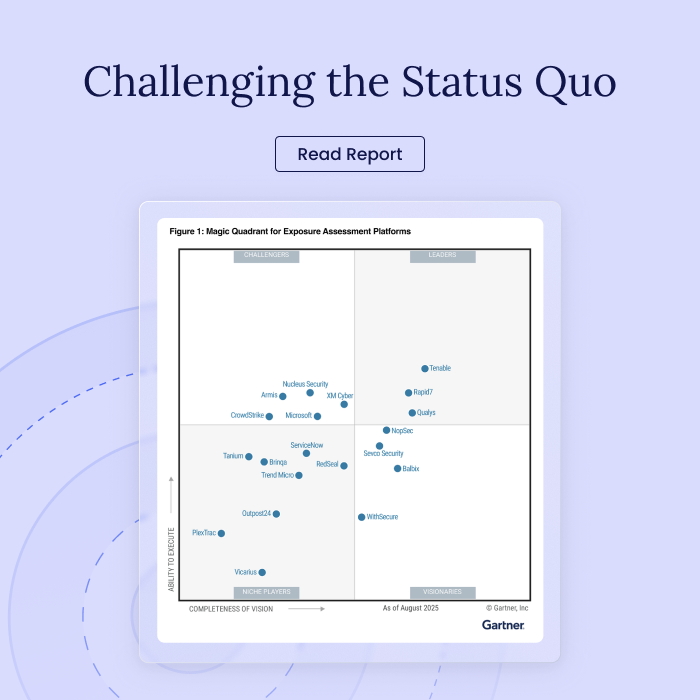

While many vendors are joining the hype around AI security, it’s not always clear how they protect organizations from the risks of AI. XM Cyber is extending its Continuous Exposure Management platform to the AI attack surface to prevent high-impact attacks that leverage AI exposures. In addition to discovering and alerting on Shadow AI, XM Cyber is adding capabilities for discovering AI credential harvesting techniques, discovering exposures in managed cloud AI services like Amazon Bedrock, Google Vertex AI, and Azure OpenAI, and measuring the risk to and from devices that run MCP servers. XM Cyber is also extending its platform to continuously discover and alert on violations of relevant compliance frameworks like the EU AI Act and the NIST AI Risk Management Framework.

AI usage across organizations is only going to increase. Now is the time to ensure your organization has the clarity it needs to uncover blind spots and establish comprehensive plans for long-term resilience.